Roleplay with Generative AI

Practice in over 40 roleplay scenarios enhanced with AI, including difficult conversations, sales pitches, interviews, negotiations, and debates.

The roleplay learning journey

1. Practice with roleplays

Practice your communication skills in scenarios that mimic real-life interactions.

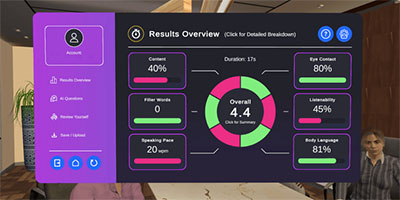

2. Feedback on your session

Receive instant AI-powered feedback on your roleplay session.

3. Reflect with a coach

Reflect on your performance with your personal AI coach.

Popular training scenarios


The AI roleplays are available both online and in VR, and are supported in 15+ languages.

AI-powered feedback

Our generative AI solution gives you feedback on what you say and highlights the areas you need to improve. This feedback is enabled for all of our roleplay scenarios, from difficult conversations to sales pitches.

AI coaching after practice

Strengthen your communication skills in 50+ professional scenarios, then unpack your performance with Hugh, your personal AI coach, to reflect, refine, and turn insights into real-world improvements.

Learn more about the AI Coach.

Customize with Roleplay Studio

Add custom roleplay scenarios for your employees or students to practice, ranging from a legal deposition to a conversation with an angry employee.

Only students or employees enrolled in your organization will see your custom prompts.

Learn more about Roleplay Studio

Multi-avatar roleplays

Engage with multiple AI-driven avatars at once to master a wider range of complex, real-world interactions.

- Interview Scenario: Handle panel-style interviews with confidence

- Sales Pitching to a Panel: Convince multiple stakeholders in one go

- Difficult Conversations: Navigate interdisciplinary workplace discussions

- Pitch Masters: Step into a Shark Tank-style pitch room

Roleplay Rooms

- Meeting Room
- Office
- Cafe
- Doctor's Office
- Debating
- Shark Tank
- Hallway
- Lobby
- Radio Station
- Broadcast Studio

For Enterprise and Higher Education

Enhance professional skills with interactive exercises, skill assessment, and advanced analytics.

All Access Program

- AI-powered conversational roleplays
- Unlimited practice
- Personal AI career coach
- No-code roleplay authoring tool
- Team management and analytics
- LMS integration