Professional Development Training for the Modern Workplace

Join 550,000+ professionals from leading organizations and boost your career with award-winning training on public speaking, leadership, sales, and more.

- Practice online or in virtual reality (VR)

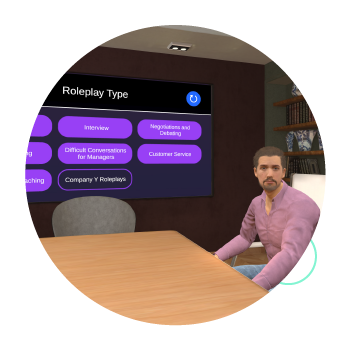

- AI-powered roleplay exercises and feedback

Online courses with practice

Award-winning, self-paced training courses designed to improve your skills in the most effective way.

We combine e-learning with interactive practice exercises so you can learn up to 4x faster.

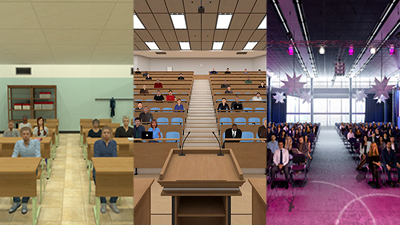

Different ways to practice

Reinforce your team's learning with engaging practice exercises that provide immediate feedback on their performance. Our interactive exercises are designed to simulate real-world scenarios, allowing your team to apply their knowledge in a practical setting.

Virtual Reality (VR)

If you have a VR headset, you can practice in virtual environments.

Online Exercises

These are completed in your browser, with no download or installation required.

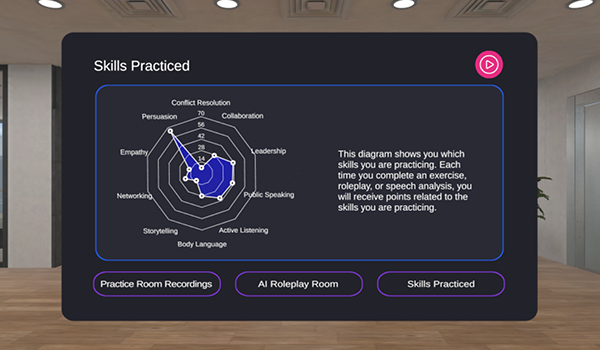

Skills Assessment dashboard

Measure the skills you or your team are practicing and identify any skill gaps.

Skills Assessment combines analysis across 10 key workplace skills so you can easily pinpoint strengths and weaknesses for individuals and teams.

The learning journey

Our blended courses combine e-learning with practice and feedback.

Learn workplace skills

Take courses on presentation skills, leadership, sales, and more to build fundamental workplace skills.

Practice what you learn

Throughout the course, you'll apply your learning with AI-powered practice exercises, either online or in VR.

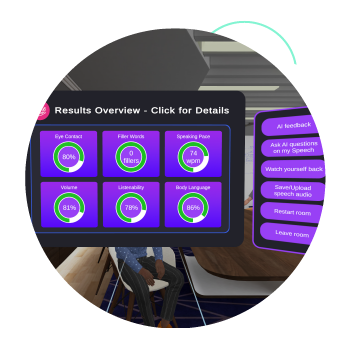

Get instant feedback

Receive instant feedback after each practice session, so you can easily identify areas to improve.

Practice difficult conversations, sales pitches, negotiations, interviews, and more.

Improve your public speaking and presentation skills with feedback on your performance.

Practice your storytelling by talking about a topic and including new words as you go.

Practice how to deliver video-conferencing presentations on Zoom, WebEx, Teams, and more.

Practice giving both positive and negative feedback to your colleagues in the workplace.

VirtualSpeech for Business

World-class training and development programs for your team, business, or organization. Read about our work with Vodafone.

20%

skills boost in just 30 minutes of practice with VirtualSpeech

100k+

learners have practiced with our AI-powered roleplay conversations

93%

of learners would recommend VirtualSpeech to a colleague

95%

said that practicing in VR helped them prepare better for real-world situations

SAM RICHARD PARKER

"VirtualSpeech gave me the confidence and the practical tools to move out of my comfort zone and embrace public speaking rather than run from it!"

JAIME DONALLY

"I found VirtualSpeech to be an incredibly innovative way to improve confidence, practice skills, and get the right feedback necessary to improve my skills."

ALEXANDRA LAHEURTE SLOYKA

"I wanted to improve my public speaking skills, and after completing the courses, I feel more prepared and have noticed an improvement in my credibility at work."

Why VirtualSpeech?

Proven Success

Join over 550,000 people across 130+ countries who use VirtualSpeech to upskill.

Learn by doing

With over 55 hands-on practice exercises, you'll improve your skills up to 4x faster.

AI Feedback

After each practice session, you'll get AI-powered feedback on the areas where you need more practice.

Boost your career

Our accredited courses help you earn a promotion and progress as a manager.

| Areas | Classroom training | Self-paced e-learning | VirtualSpeech |
|---|---|---|---|
| Practice professional development and workplace skills | ✔ | ✔ | |
| Social and engaging | ✔ | ✔ | |
| AI-powered practice | ✔ | | |
| Scalable for distributed teams | ✔ | ✔ | |
| Cost efficient | ✔ | ✔ | |
| Lasting behavior change | ✔ | ✔ | |

VirtualSpeech All Access

Subscribe now for unlimited learning, whenever and wherever suits you.

Earn digital certificates, learn at your own pace, and get access to courses, learning paths, example videos, and much more.

Unlimited Access

- Interactive Courses

- Practice exercises with feedback

- AI and ChatGPT-enhanced exercises

- Boost your career prospects

- Cancel anytime